As scientists, many of us have read a paper, been inspired by the glamorous data, carefully followed the methods section in order to replicate the results in our own hands, and failed to validate the original results. I’ve often attributed these issues to my own inexperience and naiveté as a young scientist, but over the past several years, the irreproducibility of published data has become a widespread problem. This lack of reproducibility could be perceived as a manifestation of poor experimental design and faulty interpretation of results by researchers. However, this seems counterintuitive in that so much of a scientist’s reputation rests upon the quality of his or her publication record.

Just how rampant is the reproducibility problem?

A 2012 study led by C. Glenn Begley (then the head of cancer research at Amgen, Inc.) probed the boundaries of reproducibility in cancer literature by investigating 53 landmark publications from reputable labs and high impact journals. Despite closely following the methods sections of those publications, and even consulting with the authors and sharing reagents, Begley et al. found that the data in 47 of the 53 publications could not be reproduced; only 6 held up under scrutiny. A similar study performed at Bayer Healthcare in Germany replicated only 25% of the publications examined. These reproducibility issues do not plague only the biomedical sciences. The field of psychology recently came under scrutiny during an effort called the ‘Reproducibility Project: Psychology.’ Of 100 published studies, only 39 could be reproduced by independent researchers. These facts are at once shocking, depressing, and infuriating, especially when considering preclinical publications that spawn countless secondary publications, which may lead to expensive and faulty clinical trials that inevitably fail. Unfortunately, the increasing number of flawed publications has led to a precipitous decline in the public’s trust in science and medicine.

What’s causing all of these issues?

There is an exorbitant amount of prestige in publishing high risk/high reward data that tell a “perfect story” from start to finish in a high impact journal. The very foundation of scientific progress is rooted in the generation of hypotheses based on observations, followed by the unbiased evaluation of these hypotheses through conscientious experimental design. This process should occur whether the data are positive or negative, and whether the findings prompt a groundbreaking paradigm shift or simply confirm a previous study. However, it seems that we have lost our way. Landing a manuscript in a high impact journal has become the Holy Grail instead of a nice side effect of an important scientific discovery. This is how you get the Harvard postdoctoral position and become a successful faculty member at a world-renowned research institution. This is a large part of the rubric that determines whether you are awarded funds to continue your research. This is the currency of your career and the criterion on which you will be forever sized up as a scientist. This, however, is also contributing to the increasing number of irreproducibility problems in scientific publications.

The pressure to publish quickly in a good journal and hype one’s data may cause some scientists to overinterpret or “massage” their data so that they appear more profound. However, it is my impression that most scientists are honest and truly believe both in the quality of their data and the rigor with which they performed their experiments. While there is certainly high pressure to publish in order to be successful, this is not the only issue at play.

So what else contributes to the lack of reproducibility in published work?

The answer to this question is manifold. Some reasons include poor oversight by extremely busy lab directors who may not have time to review all raw data, insufficient statistical training for graduate students and postdoctoral fellows, and the propensity of journals to eschew publishing “boring” studies with negative data. None of these reasons relate to a malicious agenda of scientists to mislead the public; they simply reflect human nature in that sometimes we are busy, distracted, tired, or just make mistakes.

What can we do to fix this problem?

The tide is changing, and many journals, such as Nature, are taking steps to mitigate reproducibility issues. Namely, they have abolished word limits in the methods section so that authors can more thoroughly describe their experimental parameters. Additionally, journals have vowed to pay greater attention to the use of proper controls and to the justification of a particular model. Authors will be encouraged to submit tables of raw data so that the journal, and the scientific community as a whole, may verify the appropriateness of statistical analysis and interpretation. In some cases, biostatisticians will be consulted. The journal PLoS One, in collaboration with Science Exchange and figshare, has launched ‘The Reproducibility Initiative.’ The aim of this endeavor is to give scientists a platform for an independent party to replicate their study and afford them a ‘Certificate of Reproducibility’ if their data hold up.

Reproducibility is of monumental importance in preclinical studies that may beget clinical trials with human patients. Therefore, the NIH and National Institute on Drug Abuse (NIDA) are also weighing in on this issue. These institutions have devised a new checklist for the research strategy section of grants to ensure that proper consideration is given to experimental design, including controls, choice of animal models, statistical criteria used to eliminate bias, and methods of blinding samples. In addition, NIH has updated the format of its Biographical Sketch such that authors are encouraged to include all published and non-published contributions to science. This change was effected to limit the emphasis placed on high profile publications when it comes to receiving grant awards. Instead, greater regard will be given to applicants who may not have a Cell paper, but did design a protocol, patent a piece of scientific equipment, generate software, or develop educational aids. Hopefully, this assuages some of the fears that academic scientists have when applying for funding and results in less pressure to overhype data.

Still, some scientists argue that more needs to change before we can fix our irreproducibility problems. A possible solution would be to set aside funding for replication studies and reward investigators for engaging in them. Furthermore, two psychologists from the UK sparked controversy when they suggested that graduate students be required to replicate published data as part of their training. These ideas initially sound expensive and potentially lacking in scientific return. However, replication studies could hasten the self-correcting ebb and flow of science. Overall, these efforts would allow researchers to test results before other investigators become mired in costly blunders that hinge upon unsubstantiated published data.

Of course, in solving the irreproducibility problem, likely the best we can hope for is incremental progress. Antibodies don’t always work as advertised. Reagents aren’t always as pure as we would like. The tools we have to work with are often limiting. People make mistakes without knowing it. Sometimes cells seem to behave differently on a Wednesday afternoon than on a Friday morning for reasons we do not yet understand. All of these can affect our data and interpretations, and people need to realize that negative data can be just as informative as positive data that tell a neat story. Finally, the attitude that Cell, Science, and Nature papers are the main basis on which our scientific prowess is judged has got to go. Together we can work towards solving these problems to publish only the most reproducible studies, regain the public’s trust in science, and continue answering new and interesting questions.

Peer edited by Nuvan Rathnayaka and Jonathan Susser

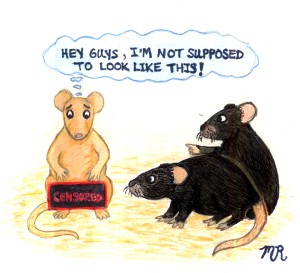

Illustration by Meagan Ryan

This article was co-published on the TIBBS Bioscience Blog.