The Era of Artificial Intelligence

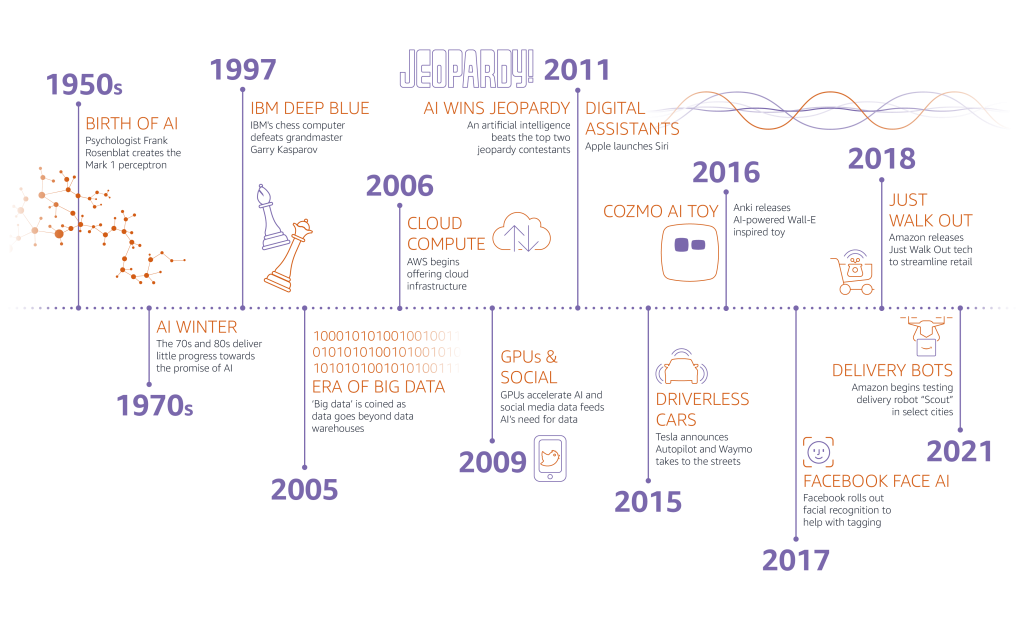

Artificial intelligence (AI) has developed rapidly in recent years, infiltrating our society in areas ranging from automated medical screenings to TV show recommendations on streaming services. Since its early roots in the work of Alan Turing, who helped break the German Enigma cipher during WWII, AI has been gradually improving our lives, with uses in language translation, industrial machinery, and gaming (see Figure 1 for a timeline of AI advancement).

Whatever your opinion of AI, we have to acknowledge that it represents a major technological advancement that will eventually reach almost every aspect of our day-to-day lives. When the World Wide Web was first proposed in 1989, few could have fathomed that it would create a globally interconnected world, leading not only to the widespread exchange of cultures and ideas but also to misinformation and exploitation. With every major technological advancement, then, we should consider and prepare for its potentially irreversible impacts on society. AI may have a profound effect on academia, not only in the classroom but also on the fundamental integrity of scientific writing and discovery. If that integrity is compromised, the consequences could be felt on a global and generational scale.

Academic Integrity in Society

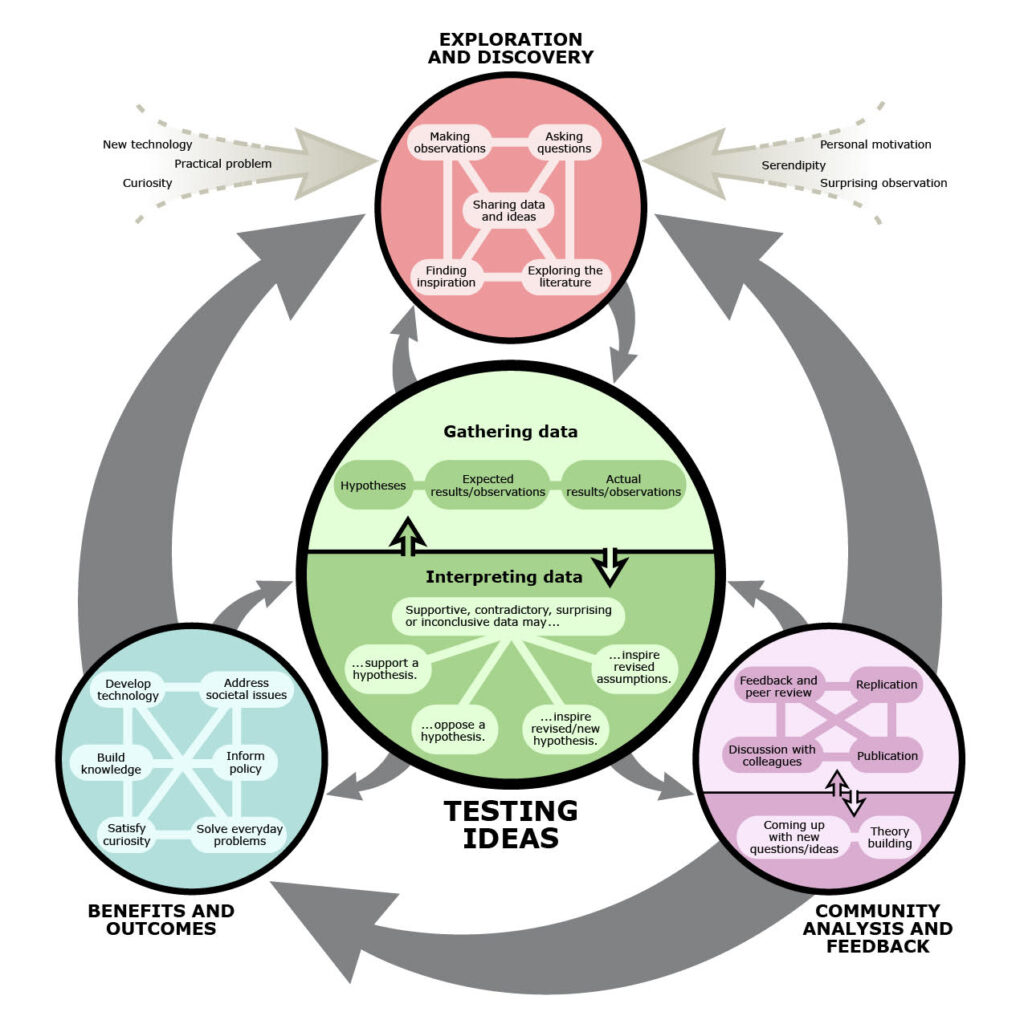

Academia in universities and research institutions (such as UNC-Chapel Hill and NASA) is founded on the pursuit of knowledge and is dedicated to generating and disseminating scientifically rigorous, reproducible, and peer-reviewed discoveries. Scientifically rigorous studies pose questions and hypotheses grounded in previous studies; collect and analyze data in a systematic and unbiased manner; present results clearly; and draw conclusions supported by the evidence. Together, these elements uphold the integrity and validity of a study. One key aspect of academic integrity is reproducibility: a study’s findings should be consistent with previous work and able to be reproduced by future studies. This, in turn, requires a transparent presentation of all methodologies and interpretations.

Once knowledge is generated in these academic studies, it must then be disseminated to the scientific community, decision-makers, and the general public. To earn the same level of trust we place in a meteorologist’s weather forecast, scholarly studies are published in peer-reviewed academic journals. Peer review is the process every scientific paper must go through before being published in such a journal: experts in the same field evaluate the study’s integrity and findings and provide feedback. From hypothesis to conclusion, academia has a collaborative culture with protocols in place to ensure the legitimacy of a study (see Figure 2 for a flowchart of the scientific process). Because academic findings directly inform policy and efforts to address societal issues, our collective confidence in the academic process is key to its longevity and success.

Detecting Plagiarism, Fabrications, and Falsifications

Plagiarism (the theft of intellectual credit), the fabrication of data, and the falsification of data are the “unholy trinity” of academic misconduct. These acts can be intentional or unintentional, stemming from publishing pressures, gift authorship, improper citation of sources, or accidental data errors. In any case, academic misconduct can undermine a field, damage public trust, and lead researchers down costly and time-consuming detours.

Since academia’s conception, protocols for detecting plagiarism and fabrication have been implemented at all major levels. Peer reviewers and editors, as discussed above, are a crucial line of defense in detecting discrepancies and anomalies based on their professional expertise. At the same time, journals and institutions rely on whistleblowers, concrete evidence, and thorough investigations when allegations of ethical misconduct arise. However, these traditional methods of detection can be limited by accessibility, resources, and time.

AI continues to improve the speed and accuracy with which we can detect plagiarism and fabrication. Emerging in the 1990s with automated comparative analysis and pattern recognition software, AI detection has improved our ability to monitor and screen the text, citations, images, and datasets of academic work for integrity problems.
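The “automated comparative analysis” mentioned above can be illustrated with one of the simplest text-matching techniques: split two passages into overlapping three-word sequences (word n-grams, or “shingles”) and measure how many they share using Jaccard similarity. The sketch below is a minimal Python illustration under that assumption, not a description of how any particular commercial checker works; the function names and the 0.5 flagging threshold are hypothetical choices made for this example.

```python
import re


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word n-grams ("shingles") in a text."""
    # Lowercase and keep only word characters so punctuation and case are ignored.
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a: str, b: str, n: int = 3) -> float:
    """Fraction of n-grams shared by two texts: |A & B| / |A | B|, from 0.0 to 1.0."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 0.0  # Both texts too short to form any n-grams.
    return len(sa & sb) / len(sa | sb)


# Hypothetical cutoff above which a pair of passages is sent to a human for review.
FLAG_THRESHOLD = 0.5


def flag_for_review(submission: str, source: str) -> bool:
    """True if the two passages overlap enough to warrant a closer human look."""
    return jaccard_similarity(submission, source) >= FLAG_THRESHOLD


if __name__ == "__main__":
    original = "Data were collected and analyzed in a systematic and unbiased manner."
    suspect = "The data were collected and analyzed in a systematic and unbiased way."
    unrelated = "Peer reviewers provide feedback on the study's integrity and findings."
    print(round(jaccard_similarity(original, suspect), 2))  # → 0.73
    print(flag_for_review(original, suspect), flag_for_review(original, unrelated))  # → True False
```

A score above the threshold would only mark a pair of passages for human review. Simple overlap measures like this are easily defeated by paraphrasing and can also flag legitimately shared phrasing, which is one reason an automated flag should never be treated as proof of misconduct on its own.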

Ethical Considerations of Using AI in Academia

Using AI to speed up tasks, conduct literature reviews, develop materials, and draft outlines for academic writing calls for just as much scholarly intuition and judgment as using any other resource or tool. Current AI tools are rooted in the information they are trained on and given, and thus do not draw original conclusions. Whereas academic experts each bring a unique perspective and background that shape their original contributions, AI inherently reuses the words of others. Researchers should therefore treat AI as a tool to support arguments, not to illuminate discoveries. And even as AI detection grows more capable, tried-and-true methods such as peer review and expert scrutiny will remain academia’s cornerstone of integrity.

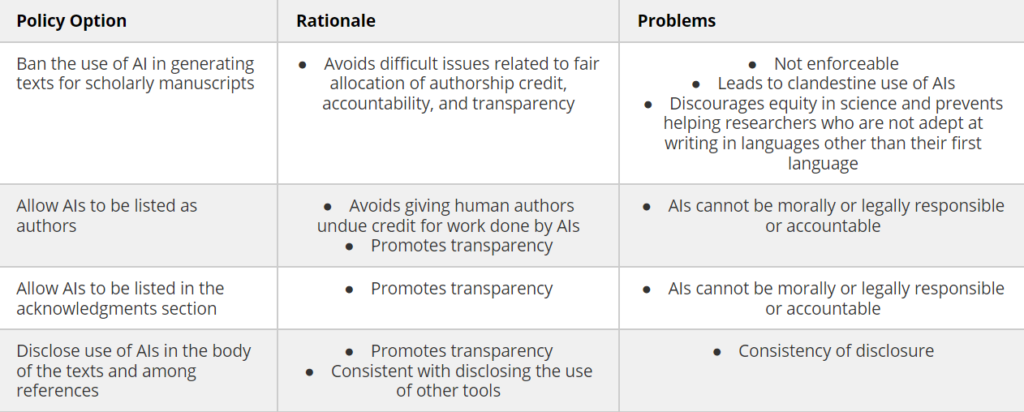

We must also be upfront about, and enforce policies on, the use of AI detection in academia. While we rely heavily on individual disclosure and reviewer intuition, automated methods are often the “first responders” of misconduct detection. However, automated detection has in some cases produced false positives that significantly affected the careers of innocent researchers and students. Policies should therefore protect not only the individual but also the research itself, as AI currently cannot be held responsible for any breach of conduct (see Table 1 for the pros and cons of potential AI policies).

Where Do We Go From Here?

While we can’t perfectly predict how AI will affect academia as a whole in the coming decades, we can acknowledge its arrival and prepare to interact with it ethically. This preparation may look different at each level of academia. Individual researchers and laboratory groups can develop ethical skills and intuitions when working with AI toward their specific research goals. Institutions can develop and implement holistic workshops, coursework, and best-practice guidelines with interdepartmental applications. Additionally, journals can take preventive screening measures and require authors to state explicitly whether (and to what extent) AI was used in producing their study. Here are a few steps we could take to ensure the integrity of AI use:

Academics

- Use AI as a tool to support, not a tool to illuminate

- Check source material and verify facts presented by AI before incorporating

- Keep records of all AI use in research

Institutions

- Host workshops on ethically conducting research and mentoring in the era of AI

- Host workshops on recognizing and preventing academic misconduct from major to minor infractions

- Develop coursework for undergraduate and graduate students to understand the ethics and best practices of using Artificial Intelligence

Journals

- Use automated plagiarism, pattern recognition, and fact-checking software to screen submissions before and after the peer-review process

- Use AI to match submissions with the best-suited expert reviewers in their field

- Require an Artificial Intelligence Statement for transparent detailing of AI contributions (outlines, coding, rephrasing, etc.) in both article submission and peer-review processes

Moving forward, academics can implement protocols and best practices to maintain academic integrity when using AI, and this discussion warrants further exploration. However, academia is only one part of AI use in the modern age, and broader discussions about the ethics and standards governing the creation and development of AI tools should be held often and publicly. If academics are going to use these third-party tools, they should hold them to the same level of rigor and transparency that they hold themselves to.

Artificial Intelligence Statement

I would like to acknowledge the contribution of ChatGPT (version 3.5), a language model developed by OpenAI (https://openai.com/), in providing direction for this article and its guiding questions. I would also like to acknowledge the contribution of Duet AI, developed by Google (https://cloud.google.com/duet-ai), in creating this article’s featured image with the prompt ‘a futuristic robot reading a generic scientific article on an old school computer’ and a ‘background’ image style. These models were accessed between January and February 2024.

Peer Editors: Nicole Gadda & Savannah Muron